
Description

I've been trying to get Pulsar to render the same output as PyTorch3D with perspective cameras, and would very much appreciate it if someone could take a look and point out any obvious misstep.

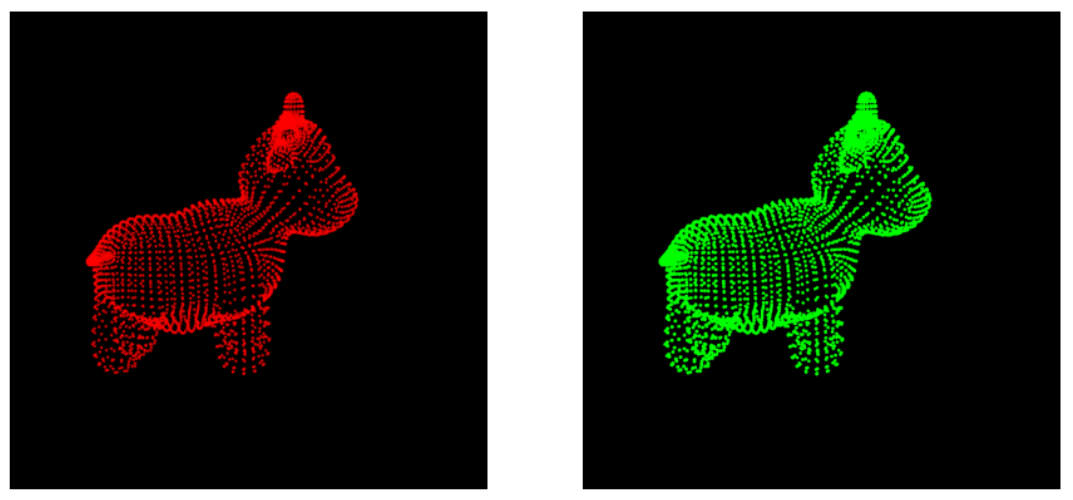

Below is an image of the output from `FoVPerspectiveCameras` for both the PyTorch3D and Pulsar renderers:

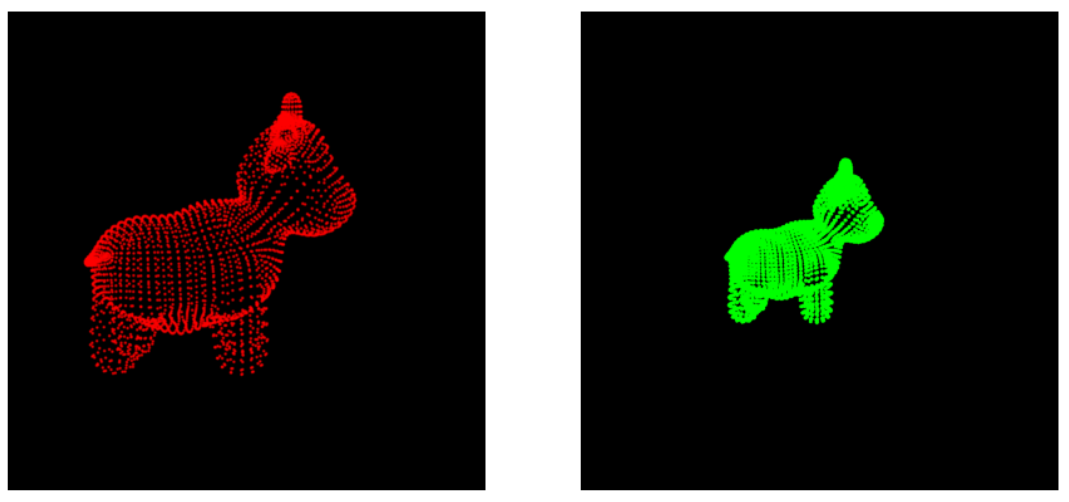

And here is the image generated when switching over to `PerspectiveCameras`.

The following code is self-contained and reproduces the images above:

```python
import os

import matplotlib.pyplot as plt
import torch

# Data structures and functions for loading and rendering
from pytorch3d.io import load_objs_as_meshes
from pytorch3d.structures import Pointclouds
from pytorch3d.renderer import (
    look_at_view_transform,
    FoVPerspectiveCameras,
    PerspectiveCameras,
    PointsRasterizationSettings,
    PointsRasterizer,
    PointsRenderer,
    PulsarPointsRenderer,
    AlphaCompositor,
)

############## Mesh and vertex creation

# Set up the mesh with the cute cow (Colab/Jupyter shell commands)
!mkdir -p data/cow_mesh
!wget -P data/cow_mesh https://dl.fbaipublicfiles.com/pytorch3d/data/cow_mesh/cow.obj
!wget -P data/cow_mesh https://dl.fbaipublicfiles.com/pytorch3d/data/cow_mesh/cow.mtl
!wget -P data/cow_mesh https://dl.fbaipublicfiles.com/pytorch3d/data/cow_mesh/cow_texture.png

DATA_DIR = "./data"
obj_filename = os.path.join(DATA_DIR, "cow_mesh/cow.obj")

# Load the obj file and use its vertices as the point cloud
device = "cpu"
mesh = load_objs_as_meshes([obj_filename], device=device)
verts = mesh.verts_list()[0]

# [N x 3] RGB features for the PyTorch3D renderer (red)
p3d_rgb = torch.zeros(len(verts), 3, device=device)
p3d_rgb[:, 0] = 1

# [N x 4] RGBA features for Pulsar (green)
puls_rgb = torch.zeros(len(verts), 4, device=device)
puls_rgb[:, 1] = 1

# Red point cloud for PyTorch3D, green for Pulsar
p3d_point_cloud = Pointclouds(points=[verts], features=[p3d_rgb])
puls_point_cloud = Pointclouds(points=[verts], features=[puls_rgb])
```

```python
# Rotation and translation via the look-at convenience function
R, T = look_at_view_transform(2.7, 0, 90)

# Shared FoVPerspectiveCameras for both renderers
cameras = FoVPerspectiveCameras(znear=0.1, device=device, R=R, T=T)

# Rasterization settings
p3d_raster_settings = PointsRasterizationSettings(
    image_size=800,
    radius=0.008,
    points_per_pixel=10,
)

################################
# The same radius renders ~10x bigger in Pulsar,
# so the Pulsar radius is set 10x smaller
################################
puls_raster_settings = PointsRasterizationSettings(
    image_size=800,
    radius=0.0008,
    points_per_pixel=10,
)

p3d_rasterizer = PointsRasterizer(cameras=cameras, raster_settings=p3d_raster_settings)
puls_rasterizer = PointsRasterizer(cameras=cameras, raster_settings=puls_raster_settings)

# Instantiate the renderers
p3d_renderer = PointsRenderer(
    rasterizer=p3d_rasterizer,
    compositor=AlphaCompositor(),
)

# Use the unified Pulsar wrapper renderer
pulsar_renderer = PulsarPointsRenderer(
    rasterizer=puls_rasterizer,
    n_channels=4,
).to(device)

p3d_image = p3d_renderer(p3d_point_cloud)
puls_image = pulsar_renderer(
    puls_point_cloud,
    gamma=(1e-5,),
    bg_col=torch.tensor([0.0, 0.0, 0.0, 1.0], dtype=torch.float32, device=device),
)

# PyTorch3D on the left, Pulsar on the right
plt.figure(0, figsize=(15, 15))
plt.subplot(1, 2, 1)
plt.axis("off")
plt.imshow(p3d_image[0, ..., :3].cpu().numpy())
plt.subplot(1, 2, 2)
plt.axis("off")
plt.imshow(puls_image[0, ..., :3].cpu().numpy())
plt.show()
```
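For scale: PyTorch3D documents `PointsRasterizationSettings.radius` in NDC units, and for a square image NDC runs from -1 to 1 across the width, so a radius corresponds to roughly `radius * image_size / 2` pixels. The sketch below uses a hypothetical helper, `ndc_radius_to_pixels`, to apply that to the two radii used here. It only models the PyTorch3D convention and makes no claim about how Pulsar interprets the value.

```python
def ndc_radius_to_pixels(radius_ndc: float, image_size: int) -> float:
    """Approximate on-screen point radius in pixels (hypothetical helper).

    Assumes a square image, where the NDC range [-1, 1] (2 units wide)
    spans image_size pixels.
    """
    return radius_ndc * image_size / 2.0


# Radii from the script above, both with image_size=800
p3d_px = ndc_radius_to_pixels(0.008, 800)    # PyTorch3D setting
puls_px = ndc_radius_to_pixels(0.0008, 800)  # Pulsar setting, if it were read as NDC

print(f"PyTorch3D radius: {p3d_px:.2f} px")
print(f"Pulsar setting read as NDC: {puls_px:.2f} px")
```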

```python
############
# Excellent results for FoVPerspectiveCameras; next is PerspectiveCameras.
# Use the intrinsics of the FoV camera above (with its defaults) as K.
K = cameras.compute_projection_matrix(
    znear=0.1,
    zfar=100.0,
    fov=60.0,
    aspect_ratio=1.0,
    degrees=True,
)

# Same R and T as above
pers_cameras = PerspectiveCameras(K=K, device=device, R=R, T=T)

# Rasterizers and renderers again
p3d_rasterizer = PointsRasterizer(cameras=pers_cameras, raster_settings=p3d_raster_settings)
puls_rasterizer = PointsRasterizer(cameras=pers_cameras, raster_settings=puls_raster_settings)

# Instantiate the renderers
p3d_renderer = PointsRenderer(
    rasterizer=p3d_rasterizer,
    compositor=AlphaCompositor(),
)

# Use the unified Pulsar wrapper renderer
pulsar_renderer = PulsarPointsRenderer(
    rasterizer=puls_rasterizer,
    n_channels=4,
).to(device)

# The Pulsar renderer needs znear and zfar for PerspectiveCameras;
# take them from the FoV camera above
znear, zfar = cameras.znear, cameras.zfar

p3d_image = p3d_renderer(p3d_point_cloud)
puls_image = pulsar_renderer(
    puls_point_cloud,
    gamma=(1e-5,),
    bg_col=torch.tensor([0.0, 0.0, 0.0, 1.0], dtype=torch.float32, device=device),
    znear=znear,
    zfar=zfar,
)

# PyTorch3D on the left, Pulsar on the right
plt.figure(1, figsize=(15, 15))
plt.subplot(1, 2, 1)
plt.axis("off")
plt.imshow(p3d_image[0, ..., :3].cpu().numpy())
plt.subplot(1, 2, 2)
plt.axis("off")
plt.imshow(puls_image[0, ..., :3].cpu().numpy())
plt.show()
```
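One thing worth checking in the `PerspectiveCameras` half is that the matrix returned by `FoVPerspectiveCameras.compute_projection_matrix` and the `K` layout that `PerspectiveCameras` expects appear to differ in the depth row, even though the x/y scale terms coincide. The pure-Python sketch below rebuilds both matrices from the formulas in the PyTorch3D docstrings as I understand them (an assumption worth verifying against the installed version) without importing PyTorch3D, then projects one camera-space point through each. The helpers `fov_projection_matrix`, `sfm_k_matrix` and `project` are hypothetical.

```python
import math


def fov_projection_matrix(znear, zfar, fov_deg, aspect_ratio=1.0):
    """FoV projection matrix following the formula documented for
    FoVPerspectiveCameras.compute_projection_matrix (assumed, not imported)."""
    tan_half_fov = math.tan(math.radians(fov_deg) / 2.0)
    max_y = tan_half_fov * znear
    min_y = -max_y
    max_x = max_y * aspect_ratio
    min_x = -max_x
    return [
        [2.0 * znear / (max_x - min_x), 0.0, (max_x + min_x) / (max_x - min_x), 0.0],
        [0.0, 2.0 * znear / (max_y - min_y), (max_y + min_y) / (max_y - min_y), 0.0],
        [0.0, 0.0, zfar / (zfar - znear), -(zfar * znear) / (zfar - znear)],
        [0.0, 0.0, 1.0, 0.0],
    ]


def sfm_k_matrix(fx, fy, px=0.0, py=0.0):
    """K layout documented for PerspectiveCameras (assumed, not imported)."""
    return [
        [fx, 0.0, px, 0.0],
        [0.0, fy, py, 0.0],
        [0.0, 0.0, 0.0, 1.0],
        [0.0, 0.0, 1.0, 0.0],
    ]


def project(mat, point):
    """Apply a 4x4 matrix to (x, y, z, 1) and divide by the last coordinate."""
    hom = [sum(mat[r][c] * v for c, v in enumerate((*point, 1.0))) for r in range(4)]
    return [v / hom[3] for v in hom[:3]]


# Defaults of the FoV camera in the script: fov=60, aspect_ratio=1, znear=0.1, zfar=100
fov_K = fov_projection_matrix(znear=0.1, zfar=100.0, fov_deg=60.0)

# NDC focal length equivalent to a 60-degree FoV: 1 / tan(30 degrees)
f = 1.0 / math.tan(math.radians(60.0) / 2.0)
sfm_K = sfm_k_matrix(fx=f, fy=f)

for row, (a, b) in enumerate(zip(fov_K, sfm_K)):
    same = all(math.isclose(x, y, abs_tol=1e-9) for x, y in zip(a, b))
    print(f"row {row}: {'same' if same else 'differs'}")

p = (0.5, 0.2, 2.7)  # arbitrary camera-space point in front of the camera
print("FoV matrix:", project(fov_K, p))
print("K layout:  ", project(sfm_K, p))
```

If these formulas match the library, passing the FoV matrix as `K` leaves the projected x/y unchanged but alters the depth value. This only illustrates the two matrix layouts; it does not by itself show which camera attributes the Pulsar wrapper reads for `PerspectiveCameras`.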